As artificial intelligence (AI) expands beyond data centers and into edge devices, demand for high-speed, low-latency connectivity is growing. Edge AI inference, where computation occurs locally on devices such as smart cameras, industrial sensors, or autonomous vehicles, requires rapid data transfer between local nodes to deliver real-time intelligence. Older 10G links can fall short on throughput, while higher-speed optics such as 100G add power draw and bulk that many edge enclosures cannot spare. Enter 25G SR (Short Reach) modules: SFP28 transceivers that carry 25 Gb/s over multimode fiber and are designed to address these challenges.

Why Low Latency Matters

Edge AI workloads demand immediate responsiveness. Cloud-based AI can often tolerate milliseconds of delay, but local inference applications, such as anomaly detection in industrial IoT, autonomous navigation, or augmented reality, may need data exchanges measured in microseconds. Added latency between edge devices can compromise system performance, safety, or user experience. 25G SR modules help here: at 25 Gb/s a frame is placed on the wire in 40% of the time a 10G link needs, and short optical runs keep propagation delay small while the same links interconnect multiple local devices.
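To make the latency argument concrete, here is a minimal back-of-the-envelope sketch in Python. It is an illustration under stated assumptions, not a measurement: the 1500-byte frame, the figure of roughly 5 ns per meter of fiber, and the function names are all assumptions introduced here, and the estimate ignores switch, FEC, and queuing delays.

```python
# Rough one-hop latency estimate for a short optical link.
# Assumptions (not from the article): 1500-byte frames, ~5 ns/m propagation
# in fiber, and no switch, FEC, or queuing delay.

FIBER_DELAY_NS_PER_M = 5.0  # approximate; light travels at about 2/3 c in glass


def serialization_delay_us(frame_bytes: int, line_rate_gbps: float) -> float:
    """Time to clock one frame onto the wire, in microseconds."""
    bits = frame_bytes * 8
    return bits / (line_rate_gbps * 1e3)  # Gb/s == 1e3 bits per microsecond


def propagation_delay_us(length_m: float) -> float:
    """Time for the signal to travel the fiber run, in microseconds."""
    return length_m * FIBER_DELAY_NS_PER_M / 1e3


if __name__ == "__main__":
    frame = 1500  # bytes, hypothetical payload size
    for rate in (10.0, 25.0):
        total = serialization_delay_us(frame, rate) + propagation_delay_us(100)
        print(f"{rate:>4.0f}G over 100 m: about {total:.2f} us per frame")
```

Under these assumptions, serialization drops from about 1.2 µs at 10G to about 0.48 µs at 25G, while the fiber run itself contributes about 0.5 µs per 100 m regardless of line rate.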

Compact Form Factor for Dense Edge Deployments

One of the distinctive challenges of edge AI is the physical environment. Edge servers, often deployed in factories, retail outlets, or urban infrastructure, have limited space. Larger transceivers can be too bulky or power-hungry for these scenarios. 25G SR modules use the compact SFP28 form factor, which shares its footprint with SFP+ and is well suited to high-density deployment. This lets network engineers connect multiple devices in a single rack or enclosure without compromising airflow, power efficiency, or scalability. The compact design also facilitates modular upgrades, allowing edge networks to evolve as AI workloads grow.

Low Power Consumption: Efficiency Meets Performance

Power efficiency is another critical factor at the edge. Many edge AI devices operate in environments where energy availability is constrained, such as remote monitoring stations or battery-powered systems. 25G SR modules in the SFP28 form factor typically draw about 1 W or less, noticeably less per module than higher-speed alternatives such as 100G optics, which makes them well suited to sustained operation in edge deployments. This low-power profile reduces operational costs, simplifies thermal management, and supports long-term reliability for mission-critical applications.
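The power argument can be sketched as a simple budget calculation. The wattages below are illustrative placeholders, not datasheet values (1.0 W for a 25G SR module and 3.5 W for a 100G module are assumptions chosen for the example), and the function names are hypothetical. Note that a 100G module also carries four times the bandwidth, so this compares per-port draw rather than power per bit.

```python
# Illustrative optics power budget for an edge enclosure.
# The per-module wattages are assumed placeholders; check the vendor datasheet.

ASSUMED_WATTS_25G_SR = 1.0   # hypothetical draw of one 25G SR (SFP28) module
ASSUMED_WATTS_100G = 3.5     # hypothetical draw of one 100G module

HOURS_PER_YEAR = 24 * 365


def optics_power_w(port_count: int, watts_per_module: float) -> float:
    """Total steady-state draw of all transceivers, in watts."""
    return port_count * watts_per_module


def annual_energy_kwh(power_w: float, hours: float = HOURS_PER_YEAR) -> float:
    """Energy used over a period of continuous operation, in kWh."""
    return power_w * hours / 1000.0


if __name__ == "__main__":
    ports = 8  # hypothetical number of populated ports
    for label, watts in (("25G SR", ASSUMED_WATTS_25G_SR), ("100G", ASSUMED_WATTS_100G)):
        power = optics_power_w(ports, watts)
        print(f"{label}: {power:.1f} W, {annual_energy_kwh(power):.1f} kWh/year")
```

A calculation like this is most useful for battery-powered or solar-powered sites, where every watt of continuous draw has to be matched by supply and by cooling capacity.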

Seamless Integration for Edge AI Inference

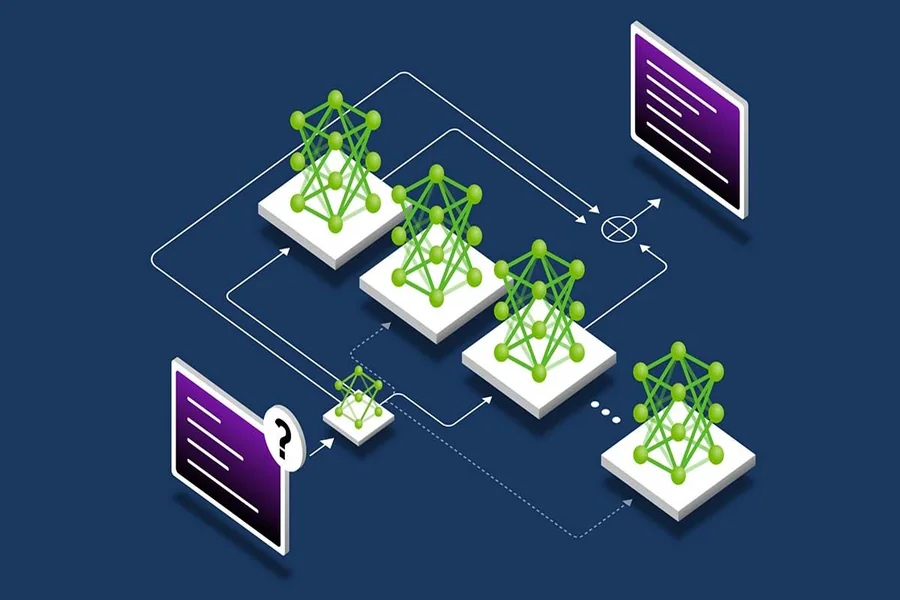

25G SR modules use standard LC duplex connectors over multimode fiber and work with existing edge networking equipment that provides SFP28 ports. This interoperability enables straightforward integration into diverse architectures, from local data aggregation points to micro data centers. By supporting high-speed, low-latency links between multiple nodes, they let AI models share intermediate results quickly, perform distributed inference, and respond to real-world events in real time.
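When planning an installation, one practical check is whether each fiber run stays within the reach of the optics. The sketch below assumes the commonly cited 25GBASE-SR limits of 70 m over OM3 and 100 m over OM4 multimode fiber; the helper name and the example run lengths are hypothetical, and a specific module's datasheet should take precedence.

```python
# Reach check for planned multimode fiber runs.
# Limits assume 25GBASE-SR figures (70 m on OM3, 100 m on OM4);
# confirm against the datasheet of the module actually deployed.

MAX_REACH_M = {"OM3": 70, "OM4": 100}


def link_within_reach(fiber_type: str, length_m: float) -> bool:
    """Return True if a run of the given fiber type and length is within reach."""
    try:
        limit = MAX_REACH_M[fiber_type.upper()]
    except KeyError:
        raise ValueError(f"unknown fiber type: {fiber_type!r}") from None
    return length_m <= limit


if __name__ == "__main__":
    # Hypothetical runs between an aggregation switch and edge nodes.
    planned_runs = [("OM3", 45), ("OM3", 85), ("OM4", 85)]
    for fiber, length in planned_runs:
        status = "ok" if link_within_reach(fiber, length) else "too long"
        print(f"{fiber} run of {length} m: {status}")
```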

Conclusion

As AI continues to migrate to the edge, networking solutions must keep pace with the stringent demands of latency, power efficiency, and compact design. 25G SR modules strike a strong balance, enabling high-speed interconnects between local devices while minimizing energy consumption and space requirements. For organizations deploying edge AI inference solutions, 25G SR is more than a component: it is a critical enabler of real-time intelligence at the network edge.